The opening keynote for PASS was delivered by Ted Kummert, the vice president of the data and storage division at Microsoft. He gave us a look at some things that are in development at the moment and are expected to be released before the next major version of SQL.

The first of these is Kilimanjaro. This is not a set of modifications or fixes to the current version of SQL; rather, it’s a managed self-service tool for BI as well as manageability improvements for the higher-end setups. They expect to deliver this sometime in the first half of 2010.

One of the things included in Kilimanjaro is a massive improvement in multi-server management in Management Studio. It adds a concept called the SQL Fabric, which contains a number of servers with a number of applications. The servers can be managed through the fabric control server, which can also provide an overview of how all the servers within the fabric are running and whether they are overloaded or have available capacity. The fabric controller also stores historical trends for the servers that it controls.

Deployments to these servers will be controlled by policy. The application developer sets up a DAC (Data-Tier Application Component) that contains all of the pieces necessary for the database, and he can set policies that affect where the app can be deployed. The DBA, when he takes the DAC to deploy it, can add additional policies that affect the required settings on the server, the required resources and other properties. The fabric control server can then select which servers are appropriate for that application.

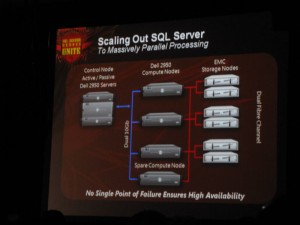
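To make the policy idea a bit more concrete, here is a rough sketch in Python of how a controller might filter servers against a combined set of developer and DBA policies. This is only my illustration of the concept and not Kilimanjaro's actual API: the `Server` and `Policy` classes, the `eligible_servers` function, and all of the server names and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Server:
    # Hypothetical properties a fabric controller might track per server.
    name: str
    free_cpu_cores: int
    free_memory_gb: int
    sql_version: int


@dataclass
class Policy:
    # A policy is a description plus a test that a server passes or fails.
    description: str
    check: Callable[[Server], bool]


def eligible_servers(servers: List[Server], policies: List[Policy]) -> List[str]:
    """Return the names of servers that satisfy every policy."""
    return [s.name for s in servers if all(p.check(s) for p in policies)]


# Made-up servers in the fabric.
servers = [
    Server("SQL01", free_cpu_cores=2, free_memory_gb=16, sql_version=10),
    Server("SQL02", free_cpu_cores=8, free_memory_gb=64, sql_version=10),
    Server("SQL03", free_cpu_cores=8, free_memory_gb=64, sql_version=9),
]

# Policies the developer ships with the application.
developer_policies = [
    Policy("needs version 10 or later", lambda s: s.sql_version >= 10),
]

# Extra policies the DBA adds at deployment time.
dba_policies = [
    Policy("at least 4 free cores", lambda s: s.free_cpu_cores >= 4),
    Policy("at least 32 GB free memory", lambda s: s.free_memory_gb >= 32),
]

candidates = eligible_servers(servers, developer_policies + dba_policies)
print(candidates)
```

With these made-up numbers only `SQL02` passes every check: `SQL01` is short on free resources and `SQL03` is on an older version.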

The second enhancement, called Madison, is a data warehouse scale-out technology based on DATAllegro (recently purchased by Microsoft). Madison puts a layer on top of SQL that allows multiple servers to look like a single instance. From what I saw, it’s aimed more at star-schema databases. It’s integrated with the BI tools and allows those databases to scale to hundreds of TB using standard hardware.

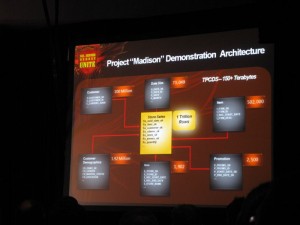

One of the developers did a demo, running a report against a 150 TB data warehouse with a fact table that contained just over a trillion rows. The data was spread out over 24 servers, each with 8 processing cores. The report completed in under 15 seconds.
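To put those numbers in perspective, here is some back-of-the-envelope arithmetic in Python. It assumes the data was spread evenly across the servers, which the demo did not state, and it rounds "just over a trillion" down to exactly a trillion, so treat the results as rough lower bounds.

```python
# Figures quoted in the demo (rounded).
servers = 24
cores_per_server = 8
warehouse_tb = 150
fact_rows = 1_000_000_000_000  # "just over a trillion"

# Assumption: data and rows are distributed evenly across servers and cores.
total_cores = servers * cores_per_server
tb_per_server = warehouse_tb / servers
rows_per_server = fact_rows / servers
rows_per_core = fact_rows / total_cores

print(f"Total cores:     {total_cores}")
print(f"TB per server:   {tb_per_server}")
print(f"Rows per server: {rows_per_server:,.0f}")
print(f"Rows per core:   {rows_per_core:,.0f}")
```

That works out to 192 cores and a little over 6 TB per server. It says nothing about how many rows the report actually had to read, since that was not shown.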

Some photos from the keynote:

The hardware used in the demo of the 150 TB data warehouse.

The schema of the massive warehouse.

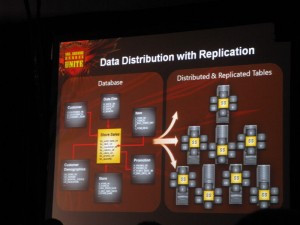

The schema broken up over all of the servers.

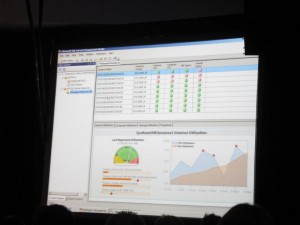

The servers that the warehouse is spread over, before the report was run. All mostly idle.

The same servers with the report running. Vertical bars show CPU usage of each core, horizontal bar below shows IOs.

The fabric dashboard showing the overall health of the servers controlled by it.

A screen showing the health, current and historical, of a server within the fabric.