Why would you want to name a transaction?

Something that i keep seeing in documentation, in forum code and in real systems is transactions that have names

BEGIN TRANSACTION VeryImportantProcess <do stuff> COMMIT VeryImportantProcess

Now the question came up on the forums a while back as to what the point of this is. Why would you name a transaction, what effect does it have on behaviour and how is it treated internally?

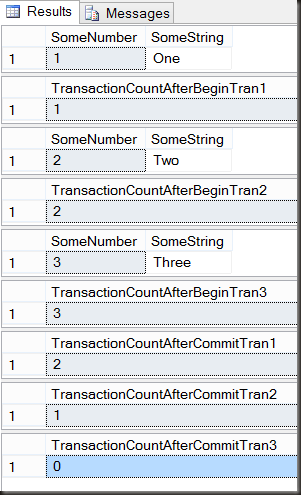

In the first post in this series I had a look at nested transactions and in that post we saw that nested transactions don’t have any effect on how the transactions are treated. The BEGIN TRANSACTION statement isn’t even logged if there’s already an open transaction in that session and a ROLLBACK still rolls everything back (unless it’s specifically rolling back to a savepoint)

First question then regarding naming of transactions is whether it has any effect at all on that behaviour.

CREATE TABLE TestTrans (

ID int,

InsertDate DATETIME

);

BEGIN TRANSACTION OuterTran

INSERT INTO TestTrans (ID, InsertDate) VALUES (1,GETDATE())

BEGIN TRANSACTION InnerTran

INSERT INTO TestTrans (ID, InsertDate) VALUES (2,GETDATE())

COMMIT TRANSACTION InnerTran

ROLLBACK TRANSACTION OuterTran

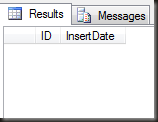

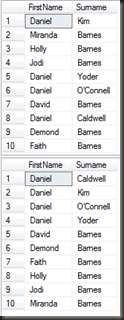

SELECT * FROM TestTrans

Clearly none. Even with all the transactions named, a commit of a named transaction did not commit anything until the transaction nest level reached 0. A rollback still rolls back everything.

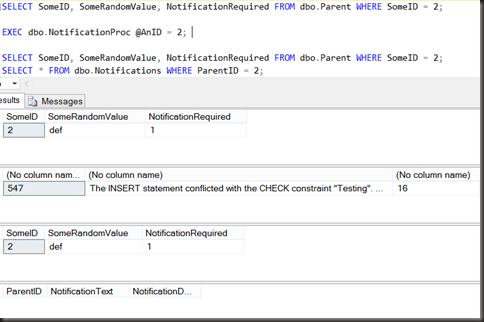

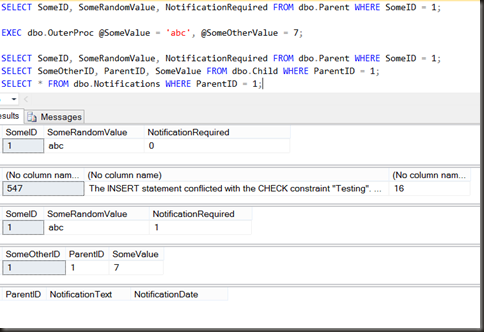

Ok, so maybe there’s some internal usage for a transaction’s name. Let’s have a look at the transaction log to see.

CHECKPOINT;

BEGIN TRANSACTION OuterTran

INSERT INTO TestTrans (ID, InsertDate) VALUES (1,GETDATE())

BEGIN TRANSACTION InnerTran

INSERT INTO TestTrans (ID, InsertDate) VALUES (2,GETDATE())

COMMIT TRANSACTION InnerTran

ROLLBACK TRANSACTION OuterTran

SELECT * FROM fn_DBLog(NULL, NULL)

The outer transaction’s name appears in the log, replacing what was ‘user_transaction’ previously, but other than that there’s no differences. The inner transaction still doesn’t appear anywhere in the log, neither does the inner commit, and the rollback doesn’t reference the transaction name anywhere.

So what conclusion can we come to here? Pretty much that naming of transactions has one real use, as a form of documentation. Instead of putting a comment above an BEGIN TRANSACTION we can give the transaction a name that indicates what the transaction does, That’s about the only real use.

With two exceptions.

There’s a seldom used option on a BEGIN TRANACTION, an optional WITH clause, Using that along with the transaction name can have some uses in exceptional circumstances.

BEGIN TRANSACTION VeryImportantProcess WITH MARK <do stuff> COMMIT TRANSACTION VeryImportantProcess

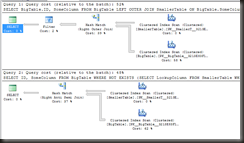

Now if we run an example of that and look into the log, there’s something different there. Interestingly, the difference isn’t on the BEGIN_XACT, it’s on the COMMIT_XACT log record.

The question still stands though, why do this? Why name a transaction and put a mark of that into the transaction log. What benefit does it give?

The mark can be used with a rather poorly known and seldom used pair of options on the restore command (when restoring transaction log backups)

- WITH STOPAFTERMARK

- WITH STOPBEFOREMARK

These two, similar to the better known STOPAT can be used to stop a transaction log restore part way through a log backup. Unlike STOPAT, they don’t take a time, but rather take a string, a name that was used for a marked transaction name.

Now to be honest there’s not all that much use for this. If a user drops a table or otherwise messes data up requiring a restore to the point before that happened they’re not going to be nice and run that accidental data mess up in a named, marked transaction.

Where it can be useful is when the transaction crossed multiple databases and/or multiple servers. The log mark appears in all of them and can therefore be used to restore multiple databases to a consistent state where that consistent state is not a single point in time.

Still, it’s useful to have in the toolbox just in case some circumstance comes up where it’s needed.

Edit: It was pointed out, both on twitter and in the comments, that adding a name to a transaction reflects in the transaction-related DMVs and potentially makes debugging easier. Absolutely, it can do and it’s a valid reason to name transactions, I’m lumping that in with ‘documentation’ in the discussions here.

There’s another case where naming transactions can affect behaviour and in this case usually in a bad way. I’ll look at in in more detail in part 4 of this series, but for now will just reference the relevant segment in Books Online:

transaction_name Is the name assigned to the transaction on BEGIN TRANSACTION. transaction_name must conform to the rules for identifiers, but only the first 32 characters of the transaction name are used. When nesting transactions, transaction_name must be the name from the outermost BEGIN TRANSACTION statement. transaction_name is always case sensitive, even when the instance of SQL Server is not case sensitive.

The full list is:

- A Mess of Nested Transactions

- Savepoints and conditional transactions

- Why would you want to name a transaction? (This post)

- When naming transactions causes an error