I originally wrote about catch-all queries early in 2009, just as something that I’d seen several times in client code. It turned into the 3rd most popular post ever on my blog.

A lot’s changed since 2009. When I wrote the original post, most production servers were SQL 2005 or SQL 2000. SQL 2008 had been out less than a year and its fix for catch-all queries, the RECOMPILE hint, didn’t even work properly (it had an incorrect results bug in RTM, was pulled in SP1 and fixed in SP2).

As such, my feelings on how to solve the problem with catch-all queries have changed over the years.

Before I get to solutions, let’s start with the root cause of the problem with catch-all queries – plan caching and the need for plans to be safe for reuse.

Let’s take a sample query. I’ll use the same one I used in the original post.

CREATE PROCEDURE SearchHistory

(@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL)

AS

SELECT ProductID, ReferenceOrderID, TransactionType, Quantity,

TransactionDate, ActualCost

FROM Production.TransactionHistory

WHERE (ProductID = @Product Or @Product IS NULL)

AND (ReferenceOrderID = @OrderID OR @OrderID Is NULL)

AND (TransactionType = @TransactionType OR @TransactionType Is NULL)

AND (Quantity = @Qty Or @Qty is null)

GO

There are two nonclustered indexes on the TransactionHistory table: one on ProductID, and one on ReferenceOrderID and ReferenceOrderLineID.

For the initial discussion, let’s just consider two of the clauses in the WHERE. I’ll leave the other two in the stored proc, but they won’t be used.

WHERE (ProductID = @Product Or @Product IS NULL)

AND (ReferenceOrderID = @OrderID OR @OrderID Is NULL)

We would expect a seek on the ProductID index if the ProductID parameter is passed, and a seek on the ReferenceOrderID index if the ReferenceOrderID parameter is passed. If both are passed, we’d expect either an index intersection or a seek on one of the indexes with a secondary filter for the other. In all cases, we’d also expect a key lookup to fetch the columns for the SELECT.

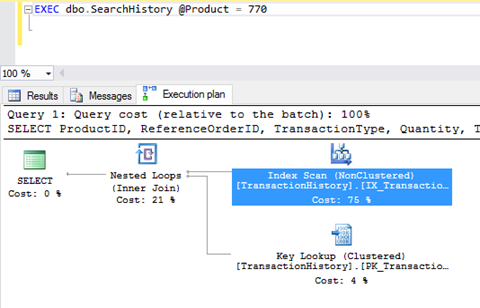

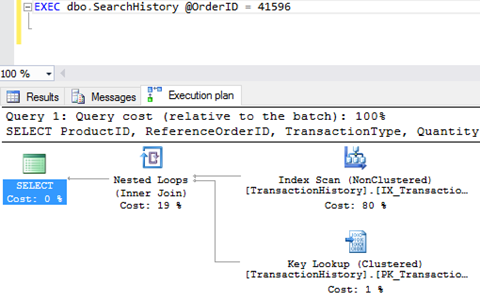

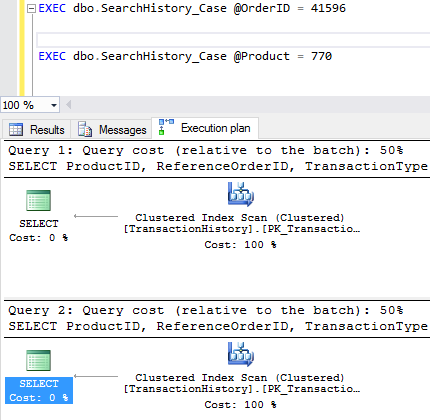

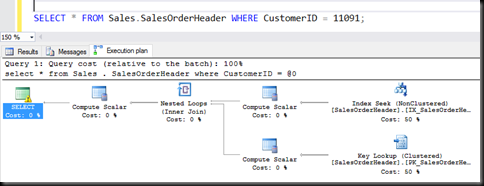

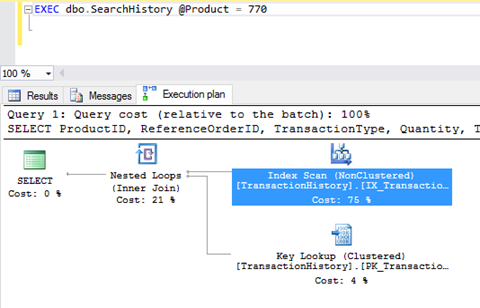

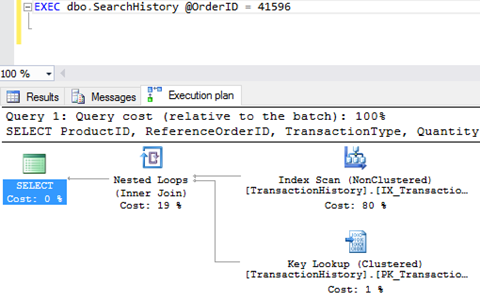

That’s not what we get (I cleared the plan cache before running each of these).

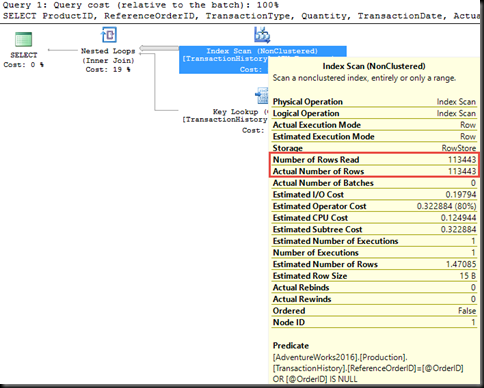

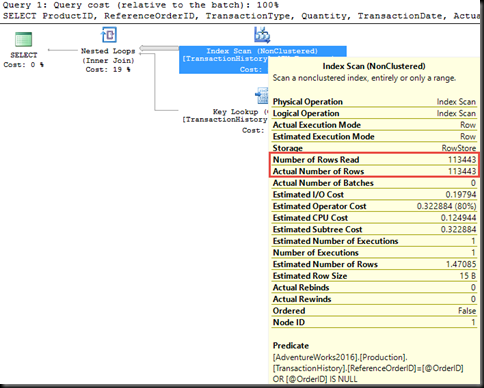

The expected indexes are used, but they’re used for scans not seeks. Why? Let’s just consider the second plan for a bit.

The indexes aren’t used for seeks because plans must be safe for reuse. If a plan were generated with an index seek on ReferenceOrderID = @OrderID, and that plan were cached and reused later when @OrderID was NULL, we’d get incorrect results: ReferenceOrderID = NULL matches no records.

And so we have index scans with the full predicate (ReferenceOrderID = @OrderID OR @OrderID Is NULL) applied after the index is read.
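To make the reuse problem concrete, here is a minimal sketch, assuming the AdventureWorks sample database and the default ANSI_NULLS ON setting. The local variable stands in for the procedure’s parameter; the column aliases are just illustrative names.

```sql
-- Sketch only: assumes AdventureWorks and SET ANSI_NULLS ON (the default).
DECLARE @OrderID int = NULL;

-- What a reused seek plan would effectively evaluate: an equality
-- comparison against NULL is never TRUE, so no rows qualify.
SELECT COUNT(*) AS SeekPredicateOnly
FROM Production.TransactionHistory
WHERE ReferenceOrderID = @OrderID;

-- What the query actually asks for: with @OrderID NULL the second
-- branch is TRUE for every row, so the whole table qualifies.
SELECT COUNT(*) AS FullPredicate
FROM Production.TransactionHistory
WHERE (ReferenceOrderID = @OrderID OR @OrderID IS NULL);
```

The first count comes back as zero and the second as the full row count of the table, which is why the optimiser can’t safely cache a plan that only seeks on the equality.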

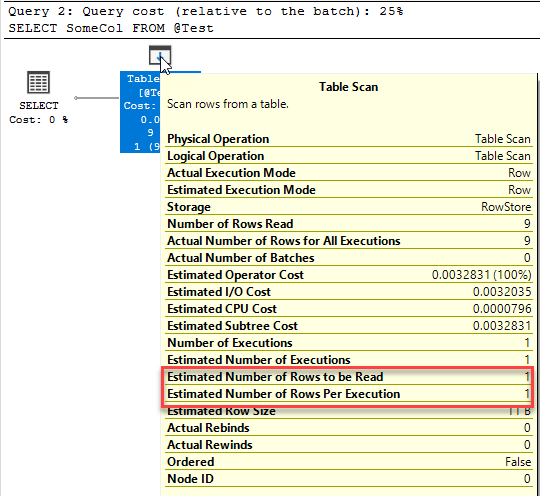

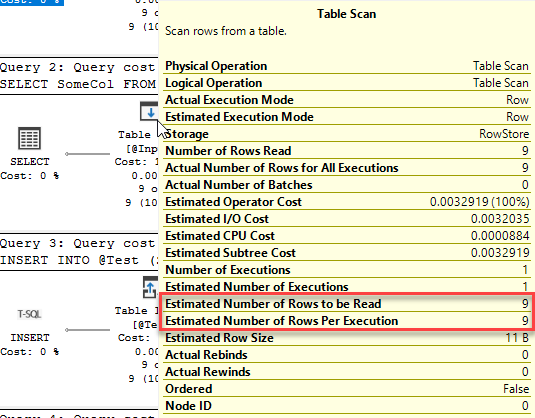

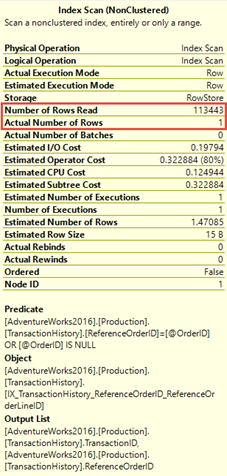

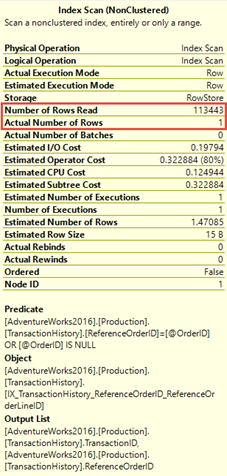

This is not particularly efficient, as the properties of the index scan show.

The entire index, all 113443 rows, was read to return a single row. Not ideal, but it’s far from the largest problem with this form of query.

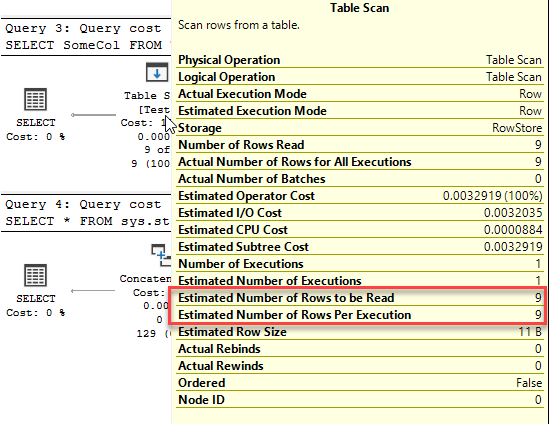

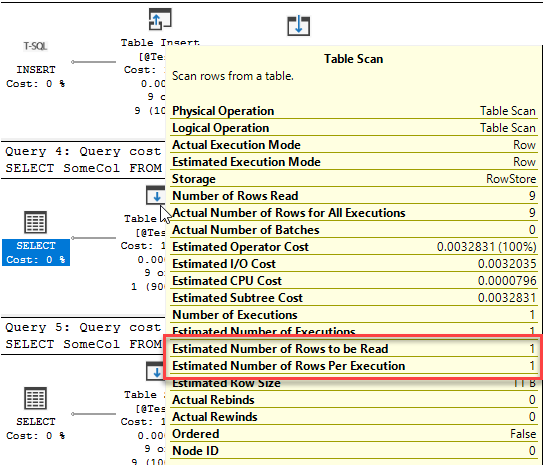

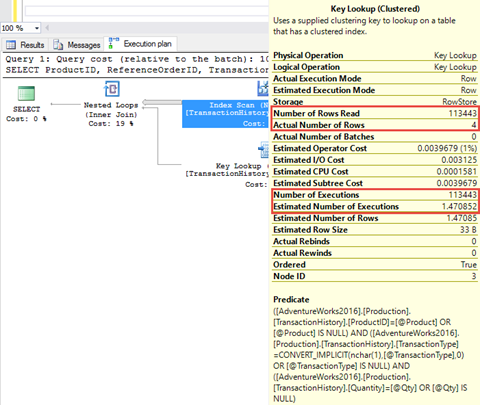

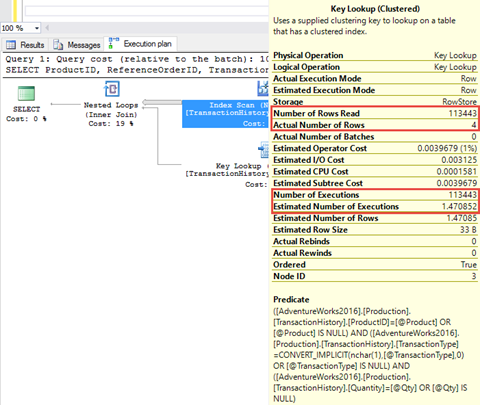

The plan’s got an index scan on the index on ReferenceOrderID, and then a key lookup back to the clustered index. That key lookup has a secondary filter on it, (ProductID = @Product Or @Product IS NULL). The optimiser assumed that a small number of rows would be returned from the index scan on ReferenceOrderID (1.47 to be specific), and hence that the key lookup would be cheap, but that’s not going to be the case if the plan is reused with a ProductID passed to it instead of a ReferenceOrderID.

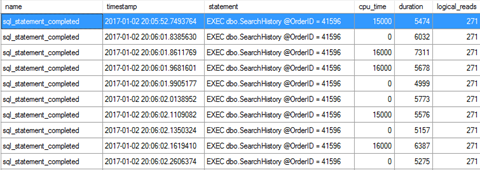

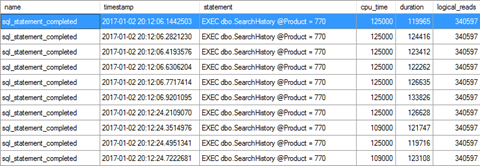

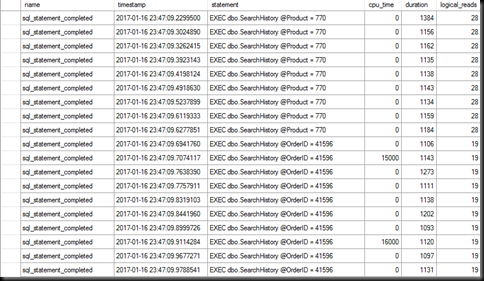

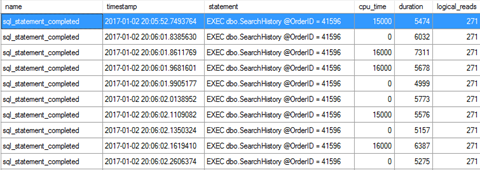

Before we look at that, the performance characteristics for the procedure being called with the ReferenceOrderID parameter are:

The duration and CPU are both in microseconds, making this a very fast query, despite the index scan.

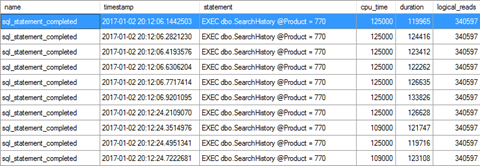

Now, without clearing the plan cache, I’m going to run the procedure with only the ProductID parameter passed.

CPU’s gone from an average of 8ms to around 120ms. Duration has gone from an average of around 6ms to about 125ms, and reads have jumped from 271 (2 MB of data processed) to 340 597 (2.6 GB of data processed).

And this is for a table that has 113k records and a query that returned 4 rows.

The key lookup, which was fine when an OrderID was passed, is not fine when @OrderID is NULL and the index scan returns the entire table.

The plans that the optimiser has come up with for this query form aren’t stable. They’re safe for reuse, they have to be, but performance-wise they’re not stable.
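For anyone wanting to reproduce the plan-reuse behaviour described above, something like the following should do it. This is a sketch rather than the exact script behind the numbers in this post, and the parameter values are hypothetical; pick ones that exist in your copy of the table.

```sql
-- Sketch only: run on a test server, never production.
-- The parameter values below are hypothetical.
DBCC FREEPROCCACHE;            -- empties the plan cache for the whole instance
GO
SET STATISTICS IO ON;          -- report logical reads per statement
SET STATISTICS TIME ON;        -- report CPU and elapsed time per statement
GO
-- First call compiles and caches a plan shaped for an OrderID search.
EXEC SearchHistory @OrderID = 67260;
GO
-- Second call reuses that cached plan for a ProductID search.
EXEC SearchHistory @Product = 978;
GO
```

Comparing the logical reads reported for the two calls in the Messages tab shows the difference without needing a trace.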

But maybe it’s just this form of query. There are other ways to write queries with multiple optional parameters.

Let’s try the CASE and COALESCE forms.

CREATE PROCEDURE SearchHistory_Coalesce

(@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL)

AS

SELECT ProductID, ReferenceOrderID, TransactionType, Quantity,

TransactionDate, ActualCost

FROM Production.TransactionHistory

WHERE ProductID = COALESCE(@Product, ProductID)

AND ReferenceOrderID = COALESCE(@OrderID, ReferenceOrderID)

AND TransactionType = COALESCE(@TransactionType, TransactionType)

AND Quantity = COALESCE(@Qty, Quantity)

GO

CREATE PROCEDURE SearchHistory_Case

(@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL)

AS

SELECT ProductID, ReferenceOrderID, TransactionType, Quantity,

TransactionDate, ActualCost

FROM Production.TransactionHistory

WHERE ProductID = CASE WHEN @Product IS NULL THEN ProductID ELSE @Product END

AND ReferenceOrderID = CASE WHEN @OrderID IS NULL THEN ReferenceOrderID ELSE @OrderID END

AND TransactionType = CASE WHEN @TransactionType IS NULL THEN TransactionType ELSE @TransactionType END

AND Quantity = CASE WHEN @Qty IS NULL THEN Quantity ELSE @Qty END

GO

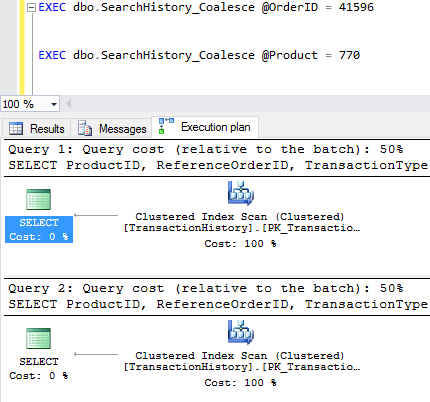

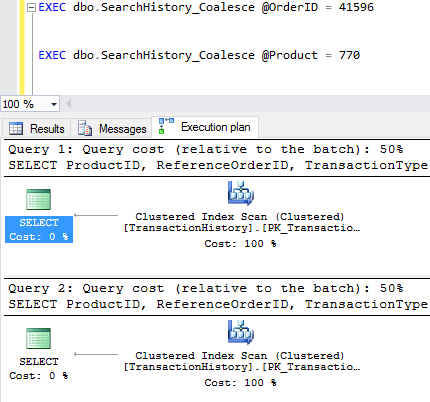

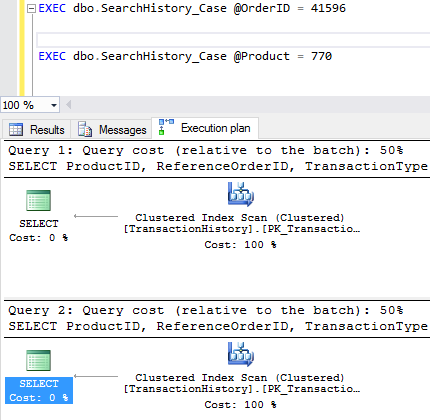

These both give us full table scans, rather than the index scan/key lookup we saw earlier. That means their performance will be predictable and consistent no matter what parameter values are used. Consistently bad, but at least consistent.

It’s also worth noting that neither of these will return correct results if there are NULL values in the columns used in the WHERE clause, because NULL = NULL evaluates to UNKNOWN rather than TRUE. Thanks to Hugo Kornelis for pointing this out.
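The NULL problem is easy to see on a tiny example. The table variable and its contents below are made up purely for illustration; they’re not part of AdventureWorks.

```sql
-- Hypothetical two-row table: one row has a NULL in the filtered column.
DECLARE @t TABLE (ID int NOT NULL, Qty int NULL);
INSERT INTO @t (ID, Qty) VALUES (1, 10), (2, NULL);

DECLARE @Qty int = NULL;   -- "no filter on Qty"

-- COALESCE form: for ID 2 this evaluates NULL = NULL, which is UNKNOWN,
-- so the row is silently dropped. Only ID 1 comes back.
SELECT ID FROM @t WHERE Qty = COALESCE(@Qty, Qty);

-- OR form: @Qty IS NULL is TRUE for every row, so both rows come back.
SELECT ID FROM @t WHERE (Qty = @Qty OR @Qty IS NULL);
```

The CASE form in SearchHistory_Case behaves the same way as the COALESCE form here, since it also ends up comparing the column to itself.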

And then two more forms that were mentioned in comments on the original post, slightly more complicated:

CREATE PROCEDURE SearchHistory_Case2

(@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL)

AS

SELECT ProductID,

ReferenceOrderID,

TransactionType,

Quantity,

TransactionDate,

ActualCost

FROM Production.TransactionHistory

WHERE (CASE WHEN @Product IS NULL THEN 1

WHEN @Product = ProductID THEN 1

ELSE 0

END) = 1

AND (CASE WHEN @OrderID IS NULL THEN 1

WHEN @OrderID = ReferenceOrderID THEN 1

ELSE 0

END) = 1

AND (CASE WHEN @TransactionType IS NULL THEN 1

WHEN @TransactionType = TransactionType THEN 1

ELSE 0

END) = 1

AND (CASE WHEN @Qty IS NULL THEN 1

WHEN @Qty = Quantity THEN 1

ELSE 0

END) = 1

GO

CREATE PROCEDURE SearchHistory_Complex

(@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL)

AS

SELECT ProductID,

ReferenceOrderID,

TransactionType,

Quantity,

TransactionDate,

ActualCost

FROM Production.TransactionHistory

WHERE ((ProductID = @Product AND @Product IS NOT NULL) OR (@Product IS NULL))

AND ((ReferenceOrderID = @OrderID AND @OrderID IS NOT NULL) OR (@OrderID IS NULL))

AND ((TransactionType = @TransactionType AND @TransactionType IS NOT NULL) OR (@TransactionType IS NULL))

AND ((Quantity = @Qty AND @Qty IS NOT NULL) OR (@Qty IS NULL))

These two give the same execution plans as the first form we looked at, index scan and key lookup.

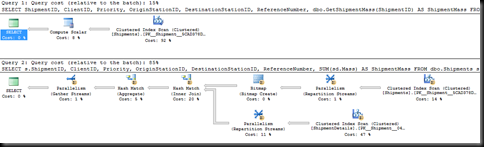

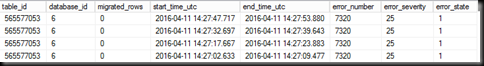

Performance-wise, we’ve got two different categories of query. We’ve got some queries where the execution plan contains an index scan on one or other index on the table (depending on parameters passed) and a key lookup, and others where the execution plan contains a table scan (clustered index scan) no matter what parameters are passed.

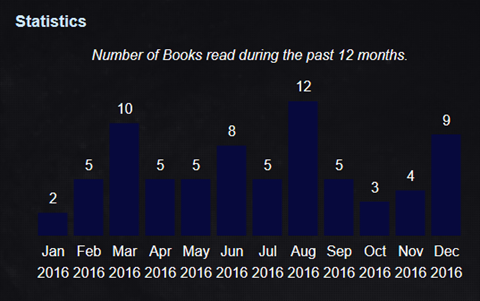

But how do they perform? To test that, I’m going to start with an empty plan cache and run each query form 10 times with just the OrderID being passed and then 10 times with just the ProductID being passed, and aggregate the results.

| Procedure | Parameter | CPU (ms) | Duration (ms) | Reads |
| --- | --- | --- | --- | --- |
| SearchHistory | OrderID | 5.2 | 50 | 271 |
| | ProductID | 123 | 173 | 340597 |
| SearchHistory_Coalesce | OrderID | 7.8 | 43 | 805 |
| | ProductID | 9.4 | 45 | 805 |
| SearchHistory_Case | OrderID | 12.5 | 55 | 805 |
| | ProductID | 7.8 | 60 | 804 |
| SearchHistory_Case2 | OrderID | 10.5 | 48 | 272 |
| | ProductID | 128 | 163 | 340597 |
| SearchHistory_Complex | OrderID | 7.8 | 40 | 272 |
| | ProductID | 127 | 173 | 340597 |
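Averages like those above can be collected in several ways (a trace, Extended Events, or the DMVs). As one possible approach, and not necessarily the one used for these numbers, here is a sketch using sys.dm_exec_procedure_stats. The parameter value is hypothetical and the column aliases are my own.

```sql
-- Sketch only: test server, run from SSMS or sqlcmd (GO 10 repeats the batch 10 times).
DBCC FREEPROCCACHE;
GO
EXEC SearchHistory @OrderID = 67260;   -- hypothetical value
GO 10
-- Averages for the cached plan so far. The DMV reports times in microseconds,
-- so divide by 1000 to get milliseconds.
SELECT OBJECT_NAME(ps.object_id, ps.database_id) AS ProcedureName,
       ps.execution_count,
       ps.total_worker_time   * 1.0 / ps.execution_count / 1000 AS AvgCpuMs,
       ps.total_elapsed_time  * 1.0 / ps.execution_count / 1000 AS AvgDurationMs,
       ps.total_logical_reads * 1.0 / ps.execution_count        AS AvgReads
FROM sys.dm_exec_procedure_stats AS ps
WHERE ps.database_id = DB_ID()
  AND ps.object_id = OBJECT_ID('SearchHistory');
GO
```

Because both parameter combinations share one cached plan, the DMV row keeps accumulating. Capture the numbers after the OrderID batch, then again after the ProductID batch, and subtract to separate the two.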

The query forms that had the clustered index scan in the plan have consistent performance. On large tables it will be consistently bad, since it is a full table scan, but it will at least be consistent.

The query forms that had the key lookup have erratic performance. No real surprise there: key lookups don’t scale well, and looking up every single row in the table is going to hurt. And note that if I ran the queries in the reverse order on an empty plan cache, the queries with the ProductID passed would be fast and the queries with the OrderID would be slow.

So how do we fix this?

When I first wrote about this problem 7 years ago, I recommended using dynamic SQL and discussed the dynamic SQL solution in detail. The dynamic SQL solution still works very well; it’s not my preferred solution any longer, however.

What is, is the RECOMPILE hint.

Yes, it does cause increased CPU usage due to the recompiles (and I know I’m likely to get called irresponsible and worse for recommending it), but in *most* cases that won’t be a huge problem. And if it is, use dynamic SQL.

I recommend considering the RECOMPILE hint first because it’s faster to implement and far easier to read. Dynamic SQL is harder to debug because of the lack of syntax highlighting and the increased complexity of the code. In the last 4 years, I’ve only had one case where I went for the dynamic SQL solution for a catch-all query, and that was on a server that was already high on CPU, with a query that ran many times a second.

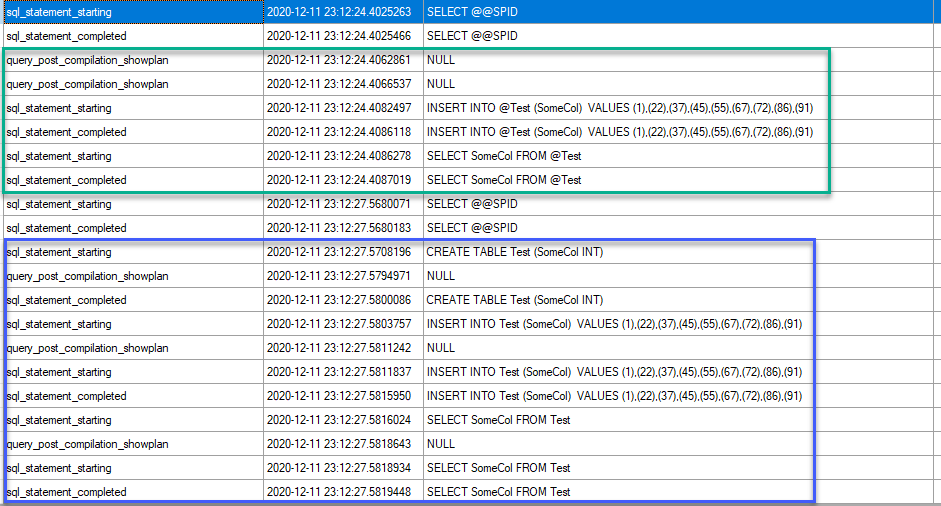

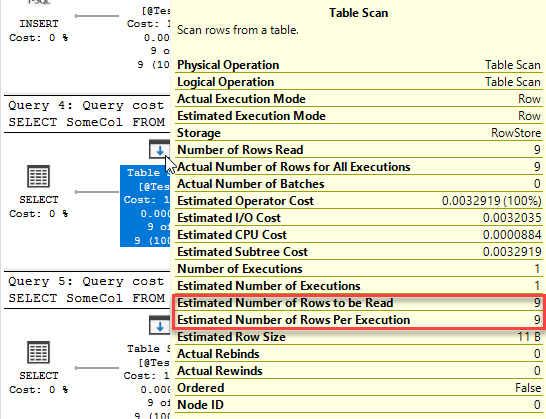

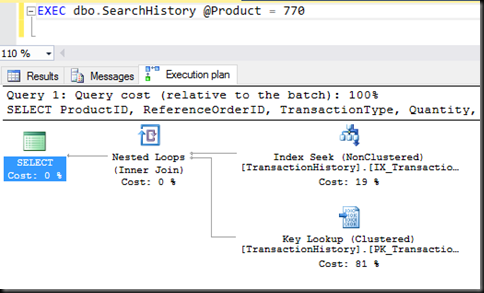

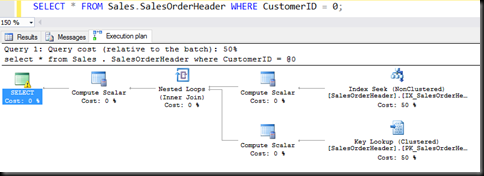

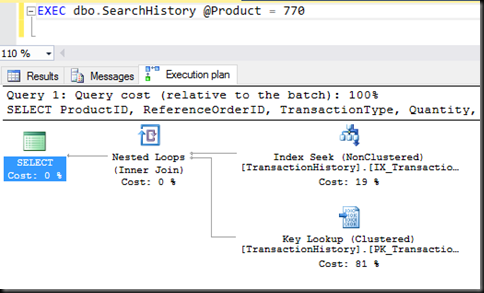

From SQL 2008 SP2/SQL 2008 R2 onwards, the recompile hint relaxes the requirement that the generated plan be safe for reuse, since it’s never going to be reused. This firstly means that the plans generated for the queries can be the optimal forms, index seeks rather than index scans, and secondly will be optimal for the parameter values passed.
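For reference, this is roughly what the first query form looks like with the statement-level hint added. The procedure name is hypothetical, chosen so it doesn’t clash with the original.

```sql
-- Sketch: the first query form with a statement-level recompile hint.
-- The procedure name SearchHistory_Recompile is hypothetical.
CREATE PROCEDURE SearchHistory_Recompile
(@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL)
AS
SELECT ProductID, ReferenceOrderID, TransactionType, Quantity,
       TransactionDate, ActualCost
FROM Production.TransactionHistory
WHERE (ProductID = @Product OR @Product IS NULL)
  AND (ReferenceOrderID = @OrderID OR @OrderID IS NULL)
  AND (TransactionType = @TransactionType OR @TransactionType IS NULL)
  AND (Quantity = @Qty OR @Qty IS NULL)
OPTION (RECOMPILE);   -- compile a fresh plan for these parameter values on every call
GO
```

The only change from the original procedure is the final OPTION (RECOMPILE) line, which is a large part of why this fix is so quick to apply.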

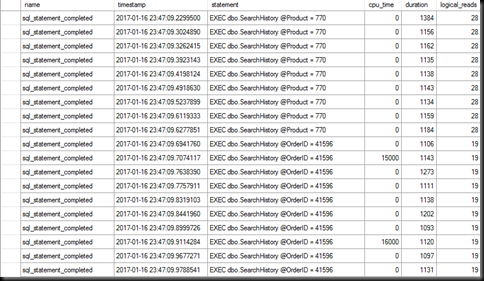

And performance-wise?

Reads down, duration down and CPU down even though we’re recompiling the plan on every execution (though this is quite a simple query, so we shouldn’t expect a lot of CPU to generate the plan).

How about the other forms? Do they also improve with the RECOMPILE hint added? As I did before, I’m going to run each 10 times and aggregate the results, this time after adding the RECOMPILE hint to each.

| Procedure | Parameter | CPU (ms) | Duration (ms) | Reads |
| --- | --- | --- | --- | --- |
| SearchHistory | OrderID | 0 | 1.3 | 28 |
| | ProductID | 0 | 1.2 | 19 |
| SearchHistory_Coalesce | OrderID | 6.2 | 1.2 | 28 |
| | ProductID | 3.2 | 1.2 | 19 |
| SearchHistory_Case | OrderID | 1.6 | 1.3 | 28 |
| | ProductID | 0 | 1.2 | 19 |
| SearchHistory_Case2 | OrderID | 7.8 | 15.6 | 232 |
| | ProductID | 7.8 | 11.7 | 279 |
| SearchHistory_Complex | OrderID | 1.5 | 1.4 | 28 |
| | ProductID | 0 | 1.2 | 19 |

What can we conclude from that?

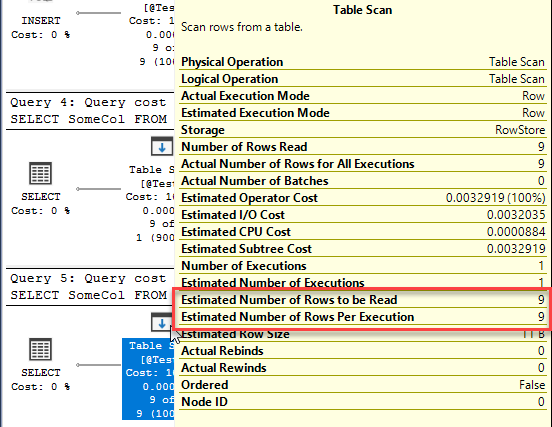

One thing we note is that the second CASE form (SearchHistory_Case2) has higher CPU, duration and reads than any other. If we look at the plan, it’s still running as an index scan/key lookup, despite the recompile hint.

The second thing is that the more complex forms perform much the same as the simpler forms; we don’t gain anything by adding more complex predicates to ‘guide’ the optimiser.

Third, the coalesce form might use slightly more CPU than the other forms, but I’d need to test a lot more to say that conclusively. The numbers we’ve got are small enough that there might well be measuring errors comparable to the number itself.

Hence, when this query form is needed, stick to the simpler forms of the query and avoid adding unnecessary predicates to ‘help’ the optimiser. Test the query with NULLs in the filtered columns to make sure it works as intended.

Consider the RECOMPILE hint first, over dynamic SQL, to make it perform well. If the query has long compile times or runs very frequently, then use dynamic SQL, but don’t automatically discount the recompile hint for fear of the overhead. In many cases it’s not that bad.
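For the cases where dynamic SQL is the better fit, here is a minimal sketch of that approach, assuming the same table and parameters as the procedures above. The procedure name, the variable names and the inner @_ parameter names are all hypothetical. Only predicates for the parameters actually supplied are added, and the values are still passed as parameters through sp_executesql rather than concatenated into the string.

```sql
-- Sketch only: names are hypothetical, not taken from the original post.
CREATE PROCEDURE SearchHistory_Dynamic
(@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL)
AS
DECLARE @sSQL nvarchar(2000), @Where nvarchar(1000) = N'';

SET @sSQL = N'SELECT ProductID, ReferenceOrderID, TransactionType, Quantity,
       TransactionDate, ActualCost
FROM Production.TransactionHistory ';

-- Add a predicate only for each parameter that was actually passed.
IF @Product IS NOT NULL
    SET @Where = @Where + N'AND ProductID = @_Product ';
IF @OrderID IS NOT NULL
    SET @Where = @Where + N'AND ReferenceOrderID = @_OrderID ';
IF @TransactionType IS NOT NULL
    SET @Where = @Where + N'AND TransactionType = @_TransactionType ';
IF @Qty IS NOT NULL
    SET @Where = @Where + N'AND Quantity = @_Qty ';

-- Swap the leading 'AND ' for 'WHERE ' if any predicate was added.
IF @Where <> N''
    SET @sSQL = @sSQL + N'WHERE ' + STUFF(@Where, 1, 4, N'');

-- Values go in as parameters, so there is no injection risk from them.
-- Parameters not referenced in the statement are simply ignored.
EXEC sp_executesql @sSQL,
    N'@_Product int, @_OrderID int, @_TransactionType char(1), @_Qty int',
    @_Product = @Product, @_OrderID = @OrderID,
    @_TransactionType = @TransactionType, @_Qty = @Qty;
GO
```

Each distinct combination of supplied parameters produces a different statement text, so each gets its own cached plan that can safely use index seeks. The cost is the extra code and the harder debugging mentioned earlier.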