It’s no secret that I’m not overly fond of most user-defined functions. This isn’t just a pet hate, I have some good reasons for disliking them. All too often they’re performance bottlenecks, but that can be said about many things in SQL. The bigger problem is that they’re hidden performance bottlenecks that often go overlooked and ignored for too long.

I’m going to start with this fairly simple scalar function, created in the AdventureWorks database

Create function LineItemTotal(@ProductID int) returns money as begin declare @Total money select @Total = sum(LineTotal) from sales.SalesOrderDetail where productid = @ProductID return @Total end

So, given that function, the following two queries should be equivalent.

SELECT productid, productnumber, dbo.LineItemTotal(productid) as SumTotal FROM Production.Product p SELECT productid, productnumber, (select sum(LineTotal) from sales.SalesOrderDetail where productid = p.productid) AS SumTotal FROM Production.Product p

No problems so far. They both return 504 rows (in my copy of AW, which has been slightly padded out with more data). Now, let’s look at the execution characteristics by running them again with Statistics IO and Statistics Time on.

Query 1, the one with the scalar function:

Table ‘Product’. Scan count 1, logical reads 4, physical reads 0.

SQL Server Execution Times:

CPU time = 47297 ms, elapsed time = 47541 ms.

Query 2, the one with the correlated subquery:

Table ‘Worktable’. Scan count 0, logical reads 0, physical reads 0.

Table ‘SalesOrderDetail’. Scan count 3, logical reads 22536, physical reads 0.

Table ‘Product’. Scan count 3, logical reads 40, physical reads 0.SQL Server Execution Times:

CPU time = 1047 ms, elapsed time = 1249 ms.

There are two things to note here. Firstly the execution time. While the query with a correlated subquery used just over a second of CPU time, the query with the function used close to a minute of CPU time. But take a look at the IO statistics, not so much for what’s there, but for what’s not there. In the IO stats for the query with the user-defined function, there’s no mention of the SalesOrderDetail table at all.

The output of Statistics IO only shows the IO characteristics of the outer query, not of the function, so from here we have absolutely no idea what kind of IO impact that function is causing.

So that’s one problem, now what about the execution plan? From the CPU and elapsed times, it’s pretty clear that the query with the function is far more expensive than the query without.

The costings on those plans are so far off as to be completely useless. The query that takes 1 sec of CPU time is apparently 100% of the cost of the batch and the one that takes 47sec of CPU time is apparently 0% of the cost of the batch. Somehow I don’t think that’s right. There’s also no indication whatsoever as to what the funtion is doing. The user-defined function is represented by the Compute Scalar operator in the first plan.

Again, there’s no indication at all as to the true cost of that function. Estimated I/O cost of 0? I somehow doubt it.

This is the main reason I have a problem with user-defined functions, the way they interact with both the query stats and the execution plan makes it very difficult to see what their impact really is when one’s doing performance testing. Someone who’s not very familiar with the intricacies and nuances of SQL, or who’s just using the exec plan without examining anything else may mistakenly conclude that user-defined functions perform well.

So, how do you actually see what a query that uses a function is actually doing? The only way is to haul out profiler and run a trace.

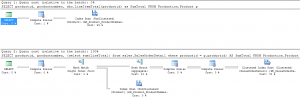

I’m going to start with just a trace on T-SQL:BatchCompleted, and I’m going to run those two queries separately to see if I can get a more accurate picture of the IO impact.

The profiler trace shows a very different picture in terms of IOs than Statistics IO did.

![]()

20000 reads for the query with the subquery, 4 and a half million for the query with the function. Ouch.

But wait, there’s more.

I’m going to run that profiler trace again, but this time I’m going to include the SP:Completed event.

Running the query with the function resulted in 505 events picked up by profiler. One was the batch completed as the entire query completed its execution. the other 504 were all SP:Completed events. As I noted earlier, the query that I’m running here returns 504 rows.

The function is being executed once for each row in the query. That’s why the duration of the query is so high (each execution of the function takes between 70 and 140 ms) and it’s why the reads are so exceedingly high. Each time the function executes it’s (in my case) doing a table scan of the SalesOrderDetail table (I have no index on ProductID). If an index is added on that column, the performance of the function becomes much better, but that may not always be the case.

Now this was a fairly simple function. Imagine if the function was a few hundred lines of T-SQL with multiple queries in it. Not a pleasant thought.

Great article, thanks for posting this. I wish I had known this ages ago!

One minor correction, you’ve referenced the 2 sample queries in reverse order when you discuss the execution characteristics. Query 1 calls the scalar function, and Query 2 uses the correlated subquery.

Thanks, I’ll fix it.

I meant to change the order that they were listed in the code block.

i knew about this for functions but i’ve noticed this behaviour with some CTE’s too. all this totally wrecked my confidence in set statistics io.

Pingback: SQL Server and Cloud Links for the Week | Brent Ozar - SQL Server DBA

Recursive CTE’s by any chance?

From what I’ve heard, and I haven’t played with them much myself, with a recursive CTE the exec plan and Stats IO are for the first (anchor) query only, not the recursion.

no, they’re not recursive. i do however have calls from cte6 to cte2 and cte3 etc…

That’s not good. Normal CTE’s are *supposed* to behave like views, not like this.

i’ll post a blog about it in a very near future… a bit busy at the moment.

Looking forward to it.

While scalar UDFs may be a huge drag, the inline ones usually aren’t. Just wanted to emphasize the distintion between scalar and inline UDFs:

http://sqlblog.com/blogs/alexander_kuznetsov/archive/2008/04/21/not-all-udfs-are-bad-for-performance.aspx

Yeah, I’m specifically and only talking about the scalar UDFs here. The inline table valued functions are, in fact, the only ones that perform well

Just wanted to say thanks for this great blog post. I’ve always known the perf drawbacks of UDFs, but then a skeptical dev here asked me to prove it. It was only then that I realized that Exec Plans don’t show everything. Dug around via Google to try and find the correct Profiler settings, and found your blog post which answered my questions! Thanks!

Pingback: Beware misleading data from SET STATISTICS IO

Pingback: Check Constraints in SQL Server and First Order Logic | Cold Logics

in my opinion, the logic in a UDF function can be “tested” by creating an sp, or inline SQL with all of the same function logic and parameeters – and then using statistics IO, showplan on the sp, inline sql.

So we are in 2019 and the SQL 2017 behaves exactly the same way…

Yes it does. SQL 2019 does introduce some changes.