It’s not uncommon to find stored procedures that have multiple IF statements controlling the flow of execution within the procedure. Now this seems to be a fairly logical thing to do, but there can be a subtle performance problem with this, one that may be hard to identify.

Let’s have a look at a simple example (using AdventureWorks)

CREATE PROCEDURE MultipleExecPaths ( @TransactionType char(1) = NULL ) AS IF @TransactionType IS NULL SELECT max(transactionDate) from Production.TransactionHistory ELSE SELECT max(transactionDate) from Production.TransactionHistory WHERE TransactionType = @TransactionType GO

Nice and simple. If the parameter is passed, get the latest date for that transaction type, if the parameter is not passed, ie is null, get the latest date over all transaction types. So what’s wrong with this?

The problem goes back to parameter sniffing. When the procedure is first executed the first time all queries in the procedure are parsed, bound and optimised. When the optimiser processes each statement to generate an execution plan it uses the values passed for the various parameters to estimate the number of rows affected. The number of rows that the optimiser thinks the queries will process affects the choice of operators for the plan. Operators that are optimal for small numbers of rows are not always optimal for large numbers of rows, and sometimes the difference can be astounding.

Let’s see how the example above plays out to understand what’s happening here.

First thing I want to do is to run the two queries in that procedure separately to see how they behave in isolation. After that I’ll run the procedure and see how it behaves, both when the first call passes the parameter and when the first call doesn’t pass a parameter.

SELECT max(transactionDate) from Production.TransactionHistory GO SELECT max(transactionDate) from Production.TransactionHistory WHERE TransactionType = 'W'

I’m hardcoding the parameter value so that we get much the same affect as we would with a parameter in a stored proc. If I used a variable, SQL wouldn’t be able to see the value of the variable at compile time and hence we wouldn’t necessarily get the same plan as we would in the procedure.

The first query, the one without a where clause executes with a clustered index scan. This isn’t surprising, there’s no index on the TransactionDate column. Execution statistics are

Table ‘TransactionHistory’. Scan count 1, logical reads 3165, physical reads 0. SQL Server Execution Times: CPU time = 125 ms, elapsed time = 131 ms.

The second query, with the filter on TransactionType also executes with a clustered index scan, even though there’s an index on TransactionType. Execution statistics are

Table ‘TransactionHistory’. Scan count 1, logical reads 3165, physical reads 0. SQL Server Execution Times: CPU time = 110 ms, elapsed time = 111 ms.

Note that I have more data in my copy of AdventureWorks than is normal, so the execution times and IO statistics are higher than they would be with a normal copy of AW.

Why the clustered index scan when there’s an index? The index on TransactionType is not covering, it doesn’t have the TransactionDate column in it, and the filter on TransactionType returns 125000 rows out of the total of 450000 in the table. That’s 27% of the table, far too high for the optimiser to consider a seek on a non-covering index and a whole load of key lookups.

So when run separately the two queries both execute in around 100ms and do just over 3000 reads. Now let’s see how they behave together in the stored procedure.

First test is going to be calling the procedure first time with a parameter

EXEC MultipleExecPaths @TransactionType = 'W' GO EXEC MultipleExecPaths

First Procedure call

Table ‘TransactionHistory’. Scan count 1, logical reads 3165, physical reads 0. SQL Server Execution Times: CPU time = 109 ms, elapsed time = 117 ms.

Second procedure call

Table ‘TransactionHistory’. Scan count 1, logical reads 3165, physical reads 0. SQL Server Execution Times: CPU time = 109 ms, elapsed time = 111 ms.

This looks fine. The execution statistics and execution plan are the same as in the case where I ran the two statements. Next test, I’m going to clear the procedure cache out and run the two procedures again, this time in the other order.

DBCC FREEPROCCACHE GO EXEC MultipleExecPaths GO EXEC MultipleExecPaths @TransactionType = 'W'

First Procedure call:

Table ‘TransactionHistory’. Scan count 1, logical reads 3165, physical reads 0. SQL Server Execution Times: CPU time = 109 ms, elapsed time = 111 ms.

Second procedure call:

Table ‘TransactionHistory’. Scan count 1, logical reads 372377, physical reads 0. SQL Server Execution Times: CPU time = 265 ms, elapsed time = 266 ms.

Oops. What happened here? The reads have gone through the roof and the query duration has more than doubled. Let’s have a look at the execution plan, see if there’s a hint there.

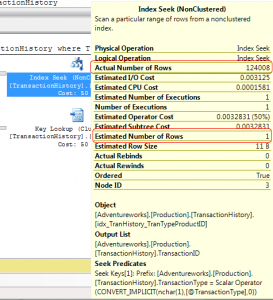

Remember I said earlier that since the filter on transaction type affected a large percentage of the table, the optimiser wouldn’t chose an index seek with a key lookup? Well, that’s exactly what it’s done here. Question is, why. There’s a partial answer in the properties of the index seek

Estimated rows 1. Actual rows 124008. Yeah, that’s going to mess up the choice of plan. A quick dig into the xml plan gives a very large clue as to what’s going on here.

<ParameterList> <ColumnReference Column="@TransactionType" ParameterCompiledValue="NULL" ParameterRuntimeValue="'W'" /> </ParameterList>

ParameterCompiledValue=”NULL”, ParameterRuntimeValue=”‘W'”. Since no rows will ever satisfy the condition TransactionType = NULL (assuming default ansi_null settings), the optimiser compiled the plan for 1 row.

What’s happened here is that when the procedure was run the first time and the optimiser generated the execution plan, it optimised all of the queries in the procedure based on the values of the parameters passed for that execution, regardless of whether the query could be executed with that particular set of parameters. So in this case, when the procedure was first executed and the execution plan was generated, it was generated for both queries based on a parameter value of NULL, even though the second branch of the IF could not be reached with that parameter value.

The important thing to take away from this is that when a procedure is compiled, the entire thing is compiled and all queries in it are optimised based on the parameter values for that call. If some queries will only be executed for certain parameter values then it can be that those queries will get very sub-optimal plans.

Great. We know the cause of the problem. What’s the fix?

Well, there are several options. The usual fixes for parameter sniffing can work here, the use of the OPTION (RECOMPILE) or OPTION (OPTIMISE FOR…) hints are certainly useful. If a query has OPTION(RECOMPILE) then its plan is never cached and so will always be compiled with an appropriate parameter value. OPTIMISE FOR can be used to override the optimiser’s parameter sniffing, regardless of what the actual parameter value is, the optimiser will use the hinted value instead.

There’s also another solution for this problem, one I personally favour. Sub-procedures. Because a stored procedure is only compiled when it’s executed, moving the contents of the branches of the IF statement into separate procedures and calling those procedures from the branches of the IF completely eliminate the chance of an inappropriate plan caused by this problem, and also prevents the optimiser from doing unnecessary work (optimising the entire procedure when only part will be run). So using this solution, the original procedure could be modified to this.

CREATE PROCEDURE MaxDateNoFilter AS SELECT max(transactionDate) from Production.TransactionHistory GO CREATE PROCEDURE MaxDateWithFilter ( @TransactionType char(1) -- doesn't need null default, because will not be called with null ) AS SELECT max(transactionDate) from Production.TransactionHistory WHERE TransactionType = @TransactionType GO CREATE PROCEDURE MultipleExecPaths ( @TransactionType char(1) = NULL ) AS IF @TransactionType IS NULL EXEC MaxDateNoFilter ELSE EXEC MaxDateWithFilter @TransactionType GO

Now each sub-procedure gets it’s own separate cached plan and no matter how the outer procedure is called the first time, the plans will be optimal for the parameters that the sub-procedures are actually executed with.

Edit: If you’re trying to reproduce my results, make sure there’s an index on TransactionType. Without that, all queries execute with a tablescan.

CREATE NONCLUSTERED INDEX [IX_TransactionHistory_TransactionType] ON [Production].[TransactionHistory] ([TransactionType] ASC)

Great, I like the sub-procedure way, and like to start incoporate it in my code. We were just talking about it over lunch.

Nice post Gail. I like sub procedures as well. The other option, not necessarily the best in this case, is Dynamic SQL using sp_executesql. Of course Dynamic SQL brings it’s own set of issues.

Sub-procedures, interesting idea. I’d be wary of it though, because it duplicates code even more. And separating them means some later coder might not recognize they are related.

Excellent example. I learn from these.

I don’t agree that it necessarily duplicates code. If it does, then originally there would have been one large proc with near-identical queries repeated again and again. If that’s the case, the code was already duplicated, just duplicated within one procedure instead of spread among several procedures

It’s much the same as in a front-end language. A function (say in C#) that has near-identical code chunks repeated again and again in various branches of if statements should probably be refactored into smaller functions.

We ran into this a couple of years back and used sub procs as well to fix it. The query time dropped from 1s to under 40ms. Dynamic SQL was ruled out by the coding standards policy, and as I’m not a fan anyway, I didn’t mind!

P.S. Thanks for an interesting user group presentation this evening.

Great article Gail. I had not previously considered using sub-procedures so this presents a very interesting alternative indeed.

Hi Gail

What about taking the original proc, taking out the IF etc and just having this one line?:

SELECT max(transactionDate) from Production.TransactionHistory where isnull(TransactionType,@TransactionType) = @TransactionType

That’s a guaranteed table/index scan. The function on the column will prevent index seeks. On the plus side, all calls will perform consistently. It’s also not equivalent to the procedure as it’s checking for null in the column whereas the proc was checking for null in the parameter. Equivalent would be

SELECT max(transactionDate) from Production.TransactionHistory where TransactionType = isnull(@TransactionType,TransactionType)

That also tends to table/index scan. Also see a blog post I wrote a couple months back called ‘Catch-all queries’ (https://www.sqlinthewild.co.za/index.php/2009/03/19/catch-all-queries/)

A change like that may be of use in the specific case that I used as an example. Imagine a case where the different branches also had slightly different queries, joining in other tables, filtering on different columns with different parameters. A case I saw of this a couple years back either joined TableA and TableB with a filter on TableB or joined TableA to TableC with a filter on TableC, depending what parameters were passed.

Gail, I’ve used sub-procs like this is the past to prevent bad plans too, but just wondered, would you set the master proc ‘MultipleExecPaths’ to always recompile also?

No. There’s no queries in the master proc in this example, hence there’s nothing to compile. Remember compiles are only done to select, insert, update, delete statement. If there are queries within the master proc then, if they’re to stay in the master, they should execute the same regardless what it’s called with, if they don’t then they’re candidates to be moved to subprocs as well.

Good article Gail. I really liked the analysis/presentation you did. Using sub procedures will help provided if we have fewer branches. If we are using too many procedures, maintenance will be a problem.

Pingback: Something for the Weekend: SQL Server Links 18/09/09 | John Sansom - SQL Server DBA in the UK

Having come from a C++ background, I instinctively use sub procedures. Now I know it’s not a bad habit after all. Thanks.

Avoiding recompiles in conditional stored procs via sub-procs is a technique I used in the 6.5 through 2000 days, but I didn’t have a chance to cycle back and see if it still applies for SS2K5 with the new statement-level recompilation feature.

I haven’t yet been able to convince myself that the sub-proc technique is still necessary…

What version and build of SQL Server are you using? I’m unable to duplicate the behavior using SS2K5 Developer Edition 9.00.4035.00 (SP3) on an x86.

I artificially increased the size of the table to 6.8 million rows (copies of itself over and over). Was there any other manipulation you did for the data distribution? Any changes to the indexing as deployed in the AW sample?

Thanks,

TroyK

SQL 2008. 2005 behaves exactly the same way. I did this as a presentation, used SQL 2008 twice and 2005 once.

The point is not avoiding recompiles, the point is that SQL doesn’t recompile and continues using a cached plan even in cases when it’s highly inefficient.

Make sure there is an index on TransactionType. I’m not sure if there’s one on that column in the default adventureworks. Without an index, you’ll get a tablescan no matter what because there’s no other way to evaluate the query

USE [Adventureworks]

GO

CREATE NONCLUSTERED INDEX [IX_TransactionHistory_TransactionType] ON [Production].[TransactionHistory]

([TransactionType] ASC)

Thanks for the additional information about the index. I had guessed that there must be one in your example, and defined one that used the TransactionType for the index key, but additionally included the TransactionDate. This has the effect of eliminating the key lookup in the suboptimal plan, so the reads don’t go through the roof as in your example.

So the 2nd recommendation that I hope your readers will keep in mind is to be sure your indexing design is optimal for the types of queries you’re running.

Bear in mind this was a simplified example. It’s possible to get this problem when all indexes are covering, eg through inappropriate joins or other operators. It’s not just an indexing question.

It’s just a bit harder to generate such an example, which is why I stuck to something simple.

What happens if the there are more than one parameters which can have multiple combinations of values while calling the master proc? We can’t have n child procs for n number of combinations if n is a large number!

If you’ve got a proc with multiple if-else branches, based of multiple parameters then you are probably way better off breaking it down into multiple smaller procs. Not sure why you’re concerned with the numbers, the same number queries would exist in the single proc, it’s just a matter of moving them elsewhere.

Great Blog!……There’s always something here to make me laugh…Keep doing what ya do 🙂

I worry about the recompile option because in a high load (hundred calls per sec) the time and processor spent to recompile could be big ( agree or not ?!).

The option to have more procedures is the right choice IMO.

As always, it depends. 🙂

If a proc is running many times a second you do not want it recompiling on each execution. The overhead will be unpleasant. If the proc is running once a day the recompile overhead is far less of a problem.

Hi Gail

Since it’s an onld post, I am not sure if it will be replied. But I do remember using and reading somewhere that to avoid parameter sniffinf, we can receive the passed parameters in variables declared inside the proc and then use those variables instead of hardcoaded values. Isn’t that true? Use of seprate proc for each IF statement somewhat looks intimidating to me if my proc has many if statements.

Yes, but….

By doing that you get a plan for the average case, not necessarily the best case.

https://www.sqlinthewild.co.za/index.php/2008/02/25/parameter-sniffing-pt-2/

Rory said this on September 17th, 2009 at 19:50

SELECT max(transactionDate) from Production.TransactionHistory where isnull(TransactionType,@TransactionType) = @TransactionType

Gail said this on September 18th, 2009 at 00:19

That’s a guaranteed table/index scan. The function on the column will prevent index seeks.

I’m definitely up to your level of expertise, so I would appreciate it if you could explain what happens when the function is not used, i.e.

SELECT MAX(transactionDate)

FROM Production.TransactionHistory

WHERE @TransactionType IS NULL

OR TransactionType = @TransactionType

Regards

See https://www.sqlinthewild.co.za/index.php/2009/03/19/catch-all-queries/

How would this work if you have more filter parameters?

Suppose you have 5 optional parameters.

If you want to prevent the “WHERE @TransactionType IS NULL OR TransactionType = @TransactionType” checks, then there are 32 variations.

We have a search UI with 9 possible parameters, that would give 512 different variations.

In that case, you probably want to look at this post instead: https://www.sqlinthewild.co.za/index.php/2009/03/19/catch-all-queries/

I have a project where the SQL processing code is identical for 50 tables, with only the table name and the PK field name changing. Right now, I’m running 50 “code chunks” to process the database. It’s moving data from one database to another. My first thought was one function with two parameters: the table name and the PK field name. But it looks like multiple stored procedures would be more efficient.

Since each “code chunk” is running SELECTs, INSERTs and DELETEs, should I pull out each SQL statement (SELECTs, INSERTs and DELETEs) into its own sub-procedure, using the table name and PK field names as parameters? I’ve considered table value functions, but as a function, it looks like it wouldn’t be the way to go.

I hope my explanation was clear enough to give you an idea what I’m trying to do. As a final stab at this, here are code examples that run in every “code chunk” that I want o run in parameterized stored procedures or UDFs:

–==== [table_A] would be replaced by a parameter with the table name: @table_A. [composite_key1]

–==== (@composite_key1) would be the PK field that would change. [composite_key2] never changes.

–==== Getting the count of records in the [DATABASE2].[dbo].[table_A] table:

;WITH [RecordCountCTE] AS (

SELECT * FROM [DATABASE2].[dbo].[table_A]

)

SELECT @record_count = COUNT(*) FROM [RecordCountCTE]

PRINT ‘Beginning record count for [DATABASE2].[dbo].[table_A]: ‘ + CAST(@record_count AS varchar(30))

–==== The tables [DATABASE1].[dbo].[table_A] and [DATABASE2].[dbo].[table_A] are identical in structure.

–==== The purpose of this DELETE is to remove any duplicated records between the two database tables.

–==== This DELETE lives in a WHILE loop. Since there is the possibility of needing to delete literally

–==== millions of records, deleting records in 100,000 record chunks avoids filling up the transaction

–==== log and killing the process:

WHILE @continue = 1

BEGIN

BEGIN TRANSACTION

DELETE TOP (100000) FROM [DATABASE1].[dbo].[table_A]

WHERE EXISTS (

SELECT TOP (100000) * FROM [DATABASE1].[dbo].[table_A] [t1]

INNER JOIN [DATABASE2].[dbo].[table_A] [t2] ON [t1].[composite_key1] = [t2].[composite_key1]

AND [t1].[composite_key2] = [t2].[composite_key2]

WHERE [t1].[composite_key1] = [t2].[composite_key1]

AND [t1].[composite_key2] = [t2].[composite_key2]

ORDER BY [t1].[composite_key1]

,[t1].[composite_key2]

)

SET @row_count = @@ROWCOUNT

IF @row_count > 0

BEGIN

SET @record_count = @record_count + @row_count

PRINT ‘Running total record count: ‘ + CAST(@record_count AS varchar(20))

END

ELSE

BEGIN

SET @continue = 0

SET @record_count = @record_count + @row_count

PRINT ‘Final total record count: ‘ + CAST(@record_count AS varchar(20))

END

COMMIT

END

Please post this on one of the SQL forums. Blog comments aren’t a good place for this.

You’re right. I sometimes get tunnel-vision and don’t consider things like that! Thanks much, and I appreciate your blog.