This is going to be a quick one…

I keep seeing forum code (and production code) that includes the DISTINCT in IN or EXISTS subqueries. The rationale is given either as a performance enhancement or as necessary for correct results.

Is it necessary or useful? Only one way to find out.

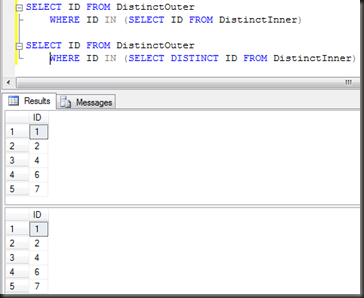

Let’s check for correct results first, because that can be done with nice small tables.

CREATE TABLE DistinctOuter ( ID INT ); CREATE TABLE DistinctInner ( ID INT ); INSERT INTO DistinctOuter VALUES (1), (2), (3), (4), (5), (6), (7), (8) INSERT INTO DistinctInner VALUES (1), (2), (2), (2), (2), (4), (6), (7)

No difference there, results are the same. I’m not going to run the test for EXISTS because, if anyone remembers how EXISTS works (or remembers a blog post I wrote a while back), EXISTS doesn’t depend on what’s in the SELECT clause at all, it just looks for existence of rows, and DISTINCT cannot remove unique rows, just duplicates.

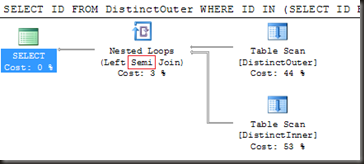

A look at the execution plan shows why there are no duplicate values returned in the first query (the one without DISTINCT).

That’s a semi-join there, not a complete join. A semi-join is a join that just checks for matches but doesn’t return rows from the second table. Since it’s just a check for existence, duplicate rows in the inner table are not going to make any difference to the results.

So that answers the correctness aspect, distinct is not necessary to get correct results. But does it improve performance by having it there? Or does it perhaps reduce the performance? Time for larger tables.

Stolen from my last look at EXISTS and IN:

CREATE TABLE PrimaryTable_Large ( id INT IDENTITY PRIMARY KEY, SomeColumn char(4) NOT NULL, Filler CHAR(100) ); CREATE TABLE SecondaryTable_Large ( id INT IDENTITY PRIMARY KEY, LookupColumn char(4) NOT NULL, SomeArbDate Datetime default getdate() ); GO INSERT INTO PrimaryTable_Large (SomeColumn) SELECT top 1000000 char(65+FLOOR(RAND(a.column_id *5645 + b.object_id)*10)) + char(65+FLOOR(RAND(b.column_id *3784 + b.object_id)*12)) + char(65+FLOOR(RAND(b.column_id *6841 + a.object_id)*12)) + char(65+FLOOR(RAND(a.column_id *7544 + b.object_id)*8)) from msdb.sys.columns a cross join msdb.sys.columns b; INSERT INTO SecondaryTable_Large (LookupColumn) SELECT SomeColumn FROM PrimaryTable_Large TABLESAMPLE (25 PERCENT);

Some row counts first.

- Total rows in PrimaryTable_Large: 1000000

- Total rows in SecondaryTable_Large: 256335

- Total distinct values in LookupColumn in SecondaryTable_Large: 10827

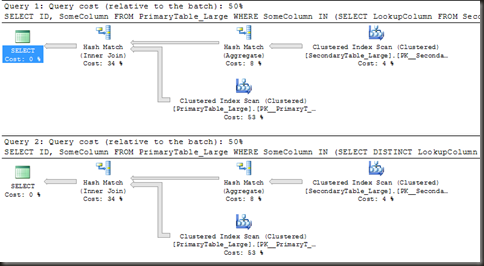

First test is without indexes on the lookup columns:

SELECT ID, SomeColumn FROM PrimaryTable_Large WHERE SomeColumn IN (SELECT LookupColumn FROM SecondaryTable_Large) SELECT ID, SomeColumn FROM PrimaryTable_Large WHERE SomeColumn IN (SELECT DISTINCT LookupColumn FROM SecondaryTable_Large)

The reads are identical, which shouldn’t be a surprise as there’s no way with the current tables to run those queries without doing a full table scan.

Table ‘Worktable’. Scan count 0, logical reads 0, physical reads 0.

Table ‘PrimaryTable_Large’. Scan count 1, logical reads 14548, physical reads 0.

Table ‘SecondaryTable_Large’. Scan count 1, logical reads 798, physical reads 0.

For durations and CPU, I’m going to run each 10 times and aggregate the results from the profiler T-SQL:BatchCompleted event. The results are just about identical.

- IN without DISTINCT: CPU 1.21 seconds, duration 12.2 seconds

- IN with DISTINCT: CPU 1.25 seconds, duration 11.9 seconds

Furthermore, the execution plans are identical. Something interesting to notice in this case is that the join is not a semi-join, it’s a complete join and to ensure that the complete join doesn’t return duplicate rows (which would be incorrect), there’s a hash match (aggregate) right before the join that’s removing duplicate rows from the inner resultset, and that’s present in both execution plans, when the distinct is specified and when it’s not.

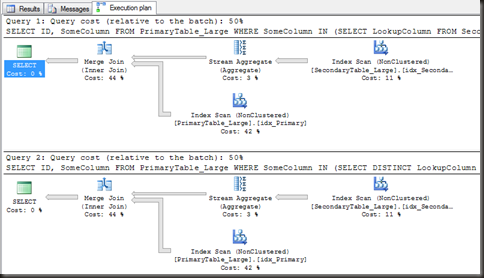

One last question to answer – does the presence of indexes change anything?

CREATE INDEX idx_Primary ON dbo.PrimaryTable_Large (SomeColumn) CREATE INDEX idx_Secondary ON dbo.SecondaryTable_Large (LookupColumn)

The execution plan has changed, in operators if not in general form. The hash join is replaced by a merge join (still a complete join, not a semi-join), the hash match (aggregate) has been replaced by a stream aggregate and the clustered index scans are now (nonclustered) index scans

The reads are still identical between the two, which should be no surprise at all. As for the durations:

- IN without DISTINCT: CPU 0.82 seconds, duration 10.1 seconds

- IN with DISTINCT: CPU 0.79 seconds, duration 10.5 seconds

Again so close that the small difference should be ignored.

So in conclusion, is there any need or use for DISTINCT in the subquery for an IN predicate? By all appearances, none whatsoever. The SQL query optimiser is smart enough to ignore the specified DISTINCT if it’s not necessary (as we saw in the first example) and to add an operator to remove duplicates if it is necessary (as we saw in the 2nd and 3rd examples), regardless of whether or not there’s a DISTINCT specified in the query.

Nice explanation Gail

Interesting. Is there any instance in which DISTINCT does provide a noticeable advantage?

Claire, I would imagine not. The optimiser’s smart enough to know that it can ignore the DISTINCT if it’s going to do a semi-join and to know that it requires one if doing a full join and so add some form of duplicate-removing operator whether or not there’s a distinct specified.

While I didn’t show the execution plan for the first tiny test for both queries, they were actually identical. The optimiser ignored the DISTINCT completely

Thanks for this detailed explanation.

This actually has some history. Early SQLs simply went down the list in the order it was written. So it paid to sort the list based on expected hits. If the list was short, this was not much of a gain, but it could matter back.

Later, optimizers would look at a list of a certain size and take other actions. The most common one was to sort the values, put them into a binary tree and do a search. A slightly fancier was to set up a hash table. This lead to the unexpected effect that adding some non-sense values to the list to pad it out to the critical size would suddenly jump performance.

I have a semi-related idea, but will hold off the full explanation of it here (for the sake of courtesy), but be it safe to say that it seems that the Query Optimizer is not going to penalize someone for old school coding.

My instincts tell me that my idea to take one of my .NET guy’s reports and turning it into INNER JOINS from WHERE’s is still a much better way to go, but you have motivated me to do a little more testing to see if there is a truly significant difference.

Great stuff and keep up the awesome work!

If you mean change this

FROM a, b where a.id = b.id

into

FROM a INNER JOIN b ON a.id = b.id

then it will have absolutely not effect. The queries are identical, the plans will be identical. The ‘newer’ style is easier to read (IMHO), less likely to miss a join and is the only way to do an outer join now, but it’s not faster.