To TOP or not to TOP an EXISTS

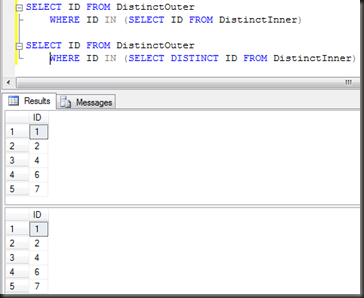

Earlier this year I had a look at a query pattern that I often see on forums and in production code, that of the Distinct within an IN subquery. Today I’m going to look at a similar patters, that being the use of TOP 1 within an EXISTS subquery.

Three tests. First a straightforward exists with no correlation (no where clause linking it to an outer query). Second, an exists with a complex query (one with a non-sargable where clause and a group by and having). Third an exists subquery correlated to the outer query.

Table structures are nice and simple, in fact, for ease I’m going to use the same tables as I did back on the exists, in and inner join tests. Code to create and populate the tables it attached to the end of the post.

First up, a simple exists query, in an IF, just to be different.

IF EXISTS (SELECT 1 FROM PrimaryTable_Medium) PRINT 'Exists' IF EXISTS (SELECT TOP (1) 1 FROM PrimaryTable_Medium) PRINT 'Exists too'

For a benchmark, a SELECT 1 FROM PrimaryTable_Medium has the following IO characteristics

Table ‘PrimaryTable_Medium’. Scan count 1, logical reads 89, physical reads 0.

SQL Server Execution Times:

CPU time = 15 ms, elapsed time = 510 ms.

Ignore the elapsed time, that’s likely mostly from displaying the records. I’m going to focus mostly on the CPU and IO.

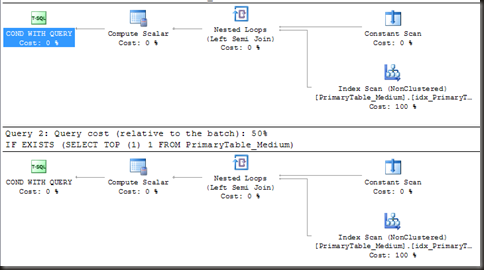

Execution plans of the two exists variations are absolutely identical.

The index operators are scans because there is no way they could be anything else, there’s no predicate so a seek is not possible. That said, it’s not a full index scan. The properties of the Index Scan show 1 row only (actual and estimated). So SQL did not read the entire index, just enough to evaluate the EXISTS, and that’s what it did in both cases. IO stats confirm that.